Every server built by my team in the last 3 years gets automatically added to our Nagios monitoring. We monitor the heck out of everything, and Nagios is configured to send us an alert email when something needs our attention.

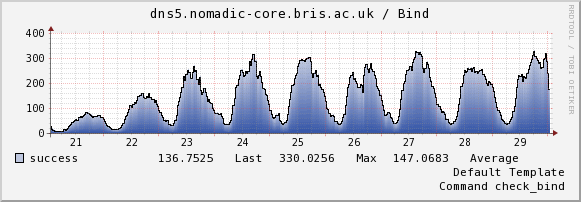

Recently we had a problem with some servers that authenticate a large volume of users for a high-profile service. Nagios spotted the issue and sent us an email, which was then delayed and didn’t hit our inboxes until 1.5 hours after the fault developed. That’s 1.5 hours of downtime on a service, in the middle of the day, because our alerting process failed us.

This is clearly Not Good Enough(tm) so we’re stepping it up a notch.

With help from David Gardner we’ve added Google Talk alerts to our Nagios instance. Now when something goes wrong, as well as getting an email about it, my instant messenger client goes “bong!” and starts flashing. Win!

If you squint at it in the right way, on a good day, Google Talk is pretty much Jabber or XMPP and that appears to be fairly easy to automate.

To do this, you need three things:

- An account for Nagios to send the alerts from

- A script which sends the alerts

- Some Nagios config to tie it all together

XMPP Account

We created a free gmail.com account for this step. If you prefer, you could use another provider such as jabber.org. I would strongly recommend you don’t re-use an account you already use for email or anything else. Keep it self-contained (and certainly don’t use your UoB gmail account!).

Once you’ve created that account, sign in to it and send an invite to the accounts you want the alerts to be sent to. This establishes a trust relationship between the accounts so the messages get through.

The Script

This is a modified version of a Perl script David Gardner helped us out with. It uses the Net::XMPP Perl module to send the messages. I prefer to install my Perl modules via yum or apt (so that they get updated like everything else) but you can install it from CPAN if you prefer. Whatever floats your boat. On CentOS it’s the perl-Net-XMPP package, and on Debian/Ubuntu it’s libnet-xmpp-perl.
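Before going further, it can be worth confirming that the module actually loads. The following is a minimal sketch, not part of the original script: `check_net_xmpp` is a hypothetical helper name, and the install commands in the comments simply apply the package names above to each distribution's usual package manager.

```shell
# Report whether the system perl can load the Net::XMPP module.
# check_net_xmpp is a hypothetical helper name used for illustration.
check_net_xmpp() {
    if perl -MNet::XMPP -e 1 2>/dev/null; then
        echo "Net::XMPP is installed"
    else
        echo "Net::XMPP is missing"
    fi
}

check_net_xmpp

# If it is missing, install the distribution package as root:
#   yum install perl-Net-XMPP          # CentOS
#   apt-get install libnet-xmpp-perl   # Debian/Ubuntu
```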

    #!/usr/bin/perl -T
    # Send a message to one or more XMPP (Google Talk) accounts.

    use strict;
    use warnings;
    use Net::XMPP;
    use Getopt::Std;

    # Command line options: -f from, -p password, -r resource, -t to, -m message
    my %opts;
    getopts('f:p:r:t:m:', \%opts);

    my $from       = $opts{f} or usage();
    my $password   = $opts{p} or usage();
    my $resource   = $opts{r} || "nagios";
    my $recipients = $opts{t} or usage();
    my $message    = $opts{m} or usage();

    # Split the sending account into its username and domain parts
    unless ($from =~ m/(.*)@(.*)/gi) {
        usage();
    }
    my ($username, $componentname) = ($1,$2);

    my $conn = Net::XMPP::Client->new;
    my $status = $conn->Connect(
        hostname       => 'talk.google.com',
        port           => 443,
        componentname  => $componentname,
        connectiontype => 'http',
        tls            => 0,
        ssl            => 1,
    );

    # Stop here if the connection failed, before touching the session
    die "Connection failed: $!" unless defined $status;

    # Change hostname: point the session at the account's domain
    my $sid = $conn->{SESSION}->{id};
    $conn->{STREAM}->{SIDS}->{$sid}->{hostname} = $componentname;

    my ( $res, $msg ) = $conn->AuthSend(
        username => $username,
        password => $password,
        resource => $resource, # client name
    );

    die "Auth failed ", defined $msg ? $msg : '', " $!"
        unless defined $res and $res eq 'ok';

    # Send the same message to every recipient in the comma separated list
    foreach my $recipient (split(',', $recipients)) {
        $conn->MessageSend(
            to       => $recipient,
            resource => $resource,
            subject  => 'message via ' . $resource,
            type     => 'chat',
            body     => $message,
        );
    }

    # Print a usage summary and exit
    sub usage {
        print qq{
    $0 - Usage
    -f "from account" (eg nagios\@myhost.org)
    -p password
    -r resource (default is "nagios")
    -t "to account" (comma separated list of people to send the message to)
    -m "message"
    };
        exit;
    }

The Nagios Config

You need to install the script in a place where Nagios can execute it, saved as notify_by_xmpp (the name used in the command definitions below) and marked executable. Usually the place where your Nagios check plugins are installed is fine; on our systems that’s /usr/lib64/nagios/plugins.
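It is probably worth sending a test message by hand before involving Nagios, so that account or trust problems show up early. This is only a sketch: `send_test_alert` is a hypothetical wrapper, the account names in the example are placeholders, and the flags are the ones the script above accepts.

```shell
# Send a one-off test message through the notification script.
# Usage: send_test_alert SCRIPT FROM PASSWORD TO
# send_test_alert is a hypothetical helper, not part of Nagios.
send_test_alert() {
    script=$1
    from=$2
    password=$3
    to=$4
    "$script" -f "$from" -p "$password" -t "$to" \
        -m "Test alert from $(uname -n) at $(date)"
}

# Example with placeholder accounts, ideally run as the nagios user:
# send_test_alert /usr/lib64/nagios/plugins/notify_by_xmpp \
#     my-nagios-alerts@gmail.com 'secret' ab12345@bristol.ac.uk
```

If the message does not arrive, check that the recipient has accepted the invite from the alert account.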

Now we need to make Nagios aware of this plugin by defining a command. In your command.cfg, add two blocks like this (one for service notifications, one for host notifications). Replace the <username@gmail.com> placeholder (angle brackets included) with the address of the gmail account you registered, and replace <password> with the account password.

    define command {
        command_name notify-by-xmpp
        command_line $USER1$/notify_by_xmpp -f <username@gmail.com> -p <password> -t $CONTACTEMAIL$ -m "$NOTIFICATIONTYPE$ $HOSTNAME$ $SERVICEDESC$ $SERVICESTATE$ $SERVICEOUTPUT$ $LONGDATETIME$"
    }

    define command {
        command_name host-notify-by-xmpp
        command_line $USER1$/notify_by_xmpp -f <username@gmail.com> -p <password> -t $CONTACTEMAIL$ -m "Host $HOSTALIAS$ is $HOSTSTATE$ - Info"
    }

This command uses the Nagios user’s registered email address for XMPP as well as email. For this to work you will probably have to use the short (non-aliased) version of the email address, i.e. ab12345@bristol.ac.uk rather than alpha.beta@bristol.ac.uk. Change this in contact.cfg if necessary.

With the script in place and registered with Nagios, we just need to tell Nagios to use it. You can enable it per-user or just enable it for everyone. In this example we’ll enable it for everyone.

Look in templates.cfg and edit the generic contact template to look like this. The relevant changes are the XMPP commands appended to the service_notification_commands and host_notification_commands lines.

    define contact{
        name                          generic-contact
        service_notification_period   24x7
        host_notification_period      24x7
        service_notification_options  w,c,r,f,s
        host_notification_options     d,u,r,f,s
        service_notification_commands notify-service-by-email, notify-by-xmpp
        host_notification_commands    notify-host-by-email, host-notify-by-xmpp
        register                      0
    }

Restart Nagios for the changes to take effect. Now break something, and wait for your IM client to receive the notification.
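A typo in a command or contact definition can stop Nagios from starting, so it may be safer to check the configuration before restarting. The sketch below uses the `-v` verification flag of the nagios binary. The config path, the `service nagios restart` command and the `safe_restart` name are assumptions, so adjust them for your install (for example, `systemctl restart nagios` on systemd hosts).

```shell
# Restart Nagios only if the configuration passes its built-in check.
# NAGIOS_BIN and NAGIOS_CFG are assumed defaults; override as needed.
NAGIOS_BIN=${NAGIOS_BIN:-nagios}
NAGIOS_CFG=${NAGIOS_CFG:-/etc/nagios/nagios.cfg}

# safe_restart is a hypothetical helper name used for illustration.
safe_restart() {
    if "$NAGIOS_BIN" -v "$NAGIOS_CFG" >/dev/null; then
        service nagios restart
    else
        echo "Config check failed; not restarting" >&2
        return 1
    fi
}

# Run as root once the config edits are in place:
# safe_restart
```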